Written by Chris Becker

Last updated on: January 4, 2024


Managing a static fleet of StrongDM servers is dead simple. You create the server in the StrongDM console, place the public key file on the box, and it’s done! This scales really well for small deployments, but as your fleet grows, the burden of manual tasks grows with it.

With automated scaling solutions such as AWS Auto Scaling Groups in our cloud environment, we need our StrongDM inventory to change in real time along with the underlying servers. The solution: automation, automation, automation!

The DevOps mindset is key: we want to automate cloud infrastructure so it operates without manual intervention. We can write scripts that hook into instance boot and shutdown events and automatically adjust our StrongDM inventory accordingly.

The examples in this post are written for AWS, but all major cloud providers should provide a similar API for instance information, lifecycle hooks, and metadata-like tags.

Automation: the hooks

For server access, there are two lifecycle events that we care about: server boot and server shutdown. We are going to write scripts that hook into these events and execute StrongDM CLI commands to perform the necessary actions.

We’ll need API keys to talk to StrongDM and our cloud provider. In this case: AWS.

StrongDM and AWS API Authentication

StrongDM provides admin tokens that facilitate access to sdm admin CLI calls. The admin token has the following permissions:

On the AWS side, servers were given a programmatic (non-console) user account with one IAM policy attached. This IAM policy can also be attached to an instance role instead of embedding credentials into the script!

The policy contains one statement: allow ec2:DescribeTags. This API call is required to build the server’s name in StrongDM.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": "ec2:DescribeTags",
          "Resource": "*"
        }
      ]
    }

Naming Convention

Server names will be dynamically built using the server’s AWS EC2 tags, with the following schema:

$APP-$ENV-$PROCESS-$INSTANCE_ID

A server with the following tags:

would result in the following name in the StrongDM inventory:

    $ sdm status
    SERVER                          STATUS      PORT    TYPE
    testapp-stage-wweb-00deadbeef   connected   60456   ssh
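To make the schema concrete, here is a minimal sketch of the name-building step in isolation. The function name `build_sdm_name` is hypothetical, and the tag values in the example call are inferred from the server name shown above. It strips the `i-` prefix with shell parameter expansion rather than `cut`, which should be equivalent for instance IDs of the form `i-<hex>`.

```bash
#!/bin/bash
# Hypothetical helper: build a StrongDM server name from tag values.
# Arguments: app, env, process, and the full EC2 instance ID (e.g. i-00deadbeef).
build_sdm_name() {
  local app="$1" env="$2" process="$3" instance_id="$4"
  # Drop the "i-" prefix so only the hexadecimal part of the ID remains.
  local trimmed="${instance_id#i-}"
  printf '%s-%s-%s-%s\n' "$app" "$env" "$process" "$trimmed"
}

# Tag values here are inferred from the example name above.
build_sdm_name testapp stage wweb i-00deadbeef
```

Keeping the name construction in one function means the boot and shutdown scripts can share it instead of each repeating the same string assembly.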

Startup

We've built a custom base AMI based on the Amazon Linux operating system. Our provisioning workflow includes a per-boot CloudInit script that executes when the server is powered on, including restarts. This script uses the StrongDM command line tool to automatically identify information about the server, register it with StrongDM, and install the on-demand SSH keys.
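Because the server name is assembled from tags, a missing tag is worth guarding against. With `--output text`, the AWS CLI prints the literal string `None` when a query such as `Tags[0].Value` finds nothing, and that string would otherwise end up in the registered name. Here is a minimal sketch of a check; `require_tag_value` is a hypothetical helper, not part of the StrongDM CLI or of the script in this post.

```bash
#!/bin/bash
# Hypothetical helper: reject tag values that would produce a malformed name.
# The AWS CLI prints the literal string "None" with --output text when a
# JMESPath query such as Tags[0].Value matches nothing.
require_tag_value() {
  local key="$1" value="$2"
  if [ -z "$value" ] || [ "$value" = "None" ]; then
    echo "missing required tag: $key" >&2
    return 1
  fi
  printf '%s\n' "$value"
}

# Literal values stand in for `aws ec2 describe-tags` output here.
require_tag_value app "testapp"
require_tag_value env "None" || echo "registration would stop here"
```

In a boot script, the second argument would come from the `aws ec2 describe-tags` call, and a failure could abort registration before a badly named server reaches the inventory.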

In /var/lib/cloud/scripts/per-instance/00_register_with_strongdm.sh

    #!/bin/bash

    # Look up this instance's ID and private IP from the EC2 metadata service
    INSTANCE_ID="$(curl --silent http://169.254.169.254/latest/meta-data/instance-id)"
    INSTANCE_ID_TRIMMED="$(echo "$INSTANCE_ID" | cut -d '-' -f 2)"
    LOCAL_IP="$(curl --silent http://169.254.169.254/latest/meta-data/local-ipv4)"

    # Build the server name from the instance's EC2 tags
    APP="$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=app --query 'Tags[0].Value' --output text)"
    ENV="$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=env --query 'Tags[0].Value' --output text)"
    PROCESS="-$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=process --query 'Tags[0].Value' --output text)-"
    SDM_SERVER_NAME="$APP-$ENV$PROCESS$INSTANCE_ID_TRIMMED"

    # Download and install the StrongDM CLI
    curl --silent -o sdm.zip -L https://app.strongdm.com/releases/cli/linux
    unzip sdm.zip
    mv sdm /usr/local/bin/sdm
    rm sdm.zip

    # Prepare the SSH directory for the login user
    mkdir -p "/home/sshuser/.ssh/"
    touch "/home/sshuser/.ssh/authorized_keys"
    chmod 0700 "/home/sshuser/.ssh/"
    chmod 0600 "/home/sshuser/.ssh/authorized_keys"
    chown -R "sshuser:sshuser" "/home/sshuser/"

    # Register the server and capture the public key StrongDM returns
    /usr/local/bin/sdm login
    PUBLIC_KEY=$(/usr/local/bin/sdm admin servers add -p "$SDM_SERVER_NAME" "sshuser@$LOCAL_IP")

    # Append the returned public key to authorized_keys (assumed step: the
    # original snippet captures the key but does not show where it is installed)
    echo "$PUBLIC_KEY" >> "/home/sshuser/.ssh/authorized_keys"

    # Touch a "lockfile" so the server can be deregistered when it's shutdown
    # (see shared/roles/strongdm_target/templates/remove_from_strongdm.sh.j2:10)
    touch /var/lock/strongdm-registered

Shutdown

To remove servers from inventory, we’ve included another script in our base AMI. It is a runlevel 0 script that removes the server from the StrongDM inventory when the system is halted (e.g., AWS ASG instance termination, console terminations, or restarts).
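One way to keep deregistration safe to run more than once is to tie it to the marker file the boot script touches. What follows is a sketch under assumptions, not the script we shipped: `deregister_if_registered` is a hypothetical function, and `SDM_BIN` and `MARKER` are illustrative variables that default to the paths used in this post.

```bash
#!/bin/bash
# Hypothetical guard: deregister only if the boot script left its marker file.
# SDM_BIN and MARKER are overridable so the function can be exercised off-box.
SDM_BIN="${SDM_BIN:-/usr/local/bin/sdm}"
MARKER="${MARKER:-/var/lock/strongdm-registered}"

deregister_if_registered() {
  local server_name="$1"
  if [ ! -e "$MARKER" ]; then
    echo "no marker at $MARKER; skipping deregistration"
    return 0
  fi
  # Remove the marker only after the delete call succeeds, so a failed
  # attempt can be retried.
  if "$SDM_BIN" admin servers delete "$server_name"; then
    rm -f "$MARKER"
  else
    echo "failed to deregister $server_name" >&2
    return 1
  fi
}
```

Called from the stop path of an init script with the computed server name, this skips servers that never registered and leaves the marker in place if the delete call fails.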

In /etc/init.d/remove_from_strongdm

    #!/bin/bash
    # chkconfig: 0123456 99 01
    # description: Deregister server from StrongDM at shutdown

    # LOCKFILE is created by the cloudinit script
    LOCKFILE=/var/lock/remove_from_strongdm

    start(){
        # set up the logger
        exec 1> >(logger -s -t $(basename $0)) 2>&1
        touch ${LOCKFILE}
    }

    stop(){
        # set up the logger
        exec 1> >(logger -s -t $(basename $0)) 2>&1
        # Remove our lock file
        rm ${LOCKFILE}
        INSTANCE_ID="$(curl http://169.254.169.254/latest/meta-data/instance-id)"
        INSTANCE_ID_TRIMMED="$(echo $INSTANCE_ID | cut -d '-' -f 2)"
        APP="$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=app --query 'Tags[0].Value' --output text)"
        ENV="$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=env --query 'Tags[0].Value' --output text)"
        PROCESS="-$(aws ec2 describe-tags --filters Name=resource-id,Values=$INSTANCE_ID Name=key,Values=process --query 'Tags[0].Value' --output text)-"
        SDM_SERVER_NAME="$APP-$ENV$PROCESS$INSTANCE_ID_TRIMMED"
        /usr/local/bin/sdm admin servers delete "$SDM_SERVER_NAME"
    }

    case "$1" in
        start) start;;
        stop) stop;;
        *)
            echo $"Usage: $0 {start|stop}"
            exit 1
    esac
    exit 0

User Access Model: StrongDM Okta Sync

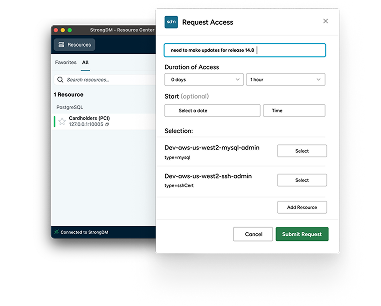

At Betterment, all of our access management lives in one single place: our identity provider. We wire up all of our authentication and access control to Okta. With this in mind, we wanted a way for Okta attributes, in our case group memberships, to propagate to server access in StrongDM. When we reached out to the engineers assisting us with the implementation, they came up with an amazing solution.

They wrote a stopgap tool for us that looks up an engineer’s groups in Okta and grants access to a set of servers based on a regular expression. It was a small tool written in Go that we could run on a schedule. The matching algorithm was controlled by a YAML file that allowed us to easily map Okta groups to StrongDM servers.

For example, if an engineer is added to the strongdm/testapp Okta group, they will now have access to all servers whose names start with testapp. Since our servers followed a distinct naming pattern, we were able to write well-defined regular expressions to match applications to their respective servers.

Here’s a small excerpt from the matchers.yml configuration file:

    groups:
      - name: strongdm/testapp
        servers:
          - testapp.*

In our case, the YAML file was stored in source control, and a Jenkins job ran the sync every five minutes.
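To see what a pattern like `testapp.*` selects, you can try it against a list of server names. The snippet below is only an illustration: `servers_matching` is a hypothetical helper, the second and third server names are made up, and anchoring the pattern to the whole name is an assumption, since the sync tool's exact matching rules are not shown here.

```bash
#!/bin/bash
# Illustration only: list the server names that a matchers.yml pattern selects.
# Reads one server name per line on stdin. Anchoring the pattern to the whole
# name is an assumption; the actual tool's matching rules are not shown here.
servers_matching() {
  local pattern="$1"
  grep -E "^(${pattern})$"
}

# The first name comes from this post; the other two are hypothetical.
printf '%s\n' \
  testapp-stage-wweb-00deadbeef \
  testapp-prod-worker-00c0ffee \
  otherapp-stage-web-00facade |
  servers_matching 'testapp.*'
```

With these inputs, the two `testapp-` servers are printed and the `otherapp-` server is not, which is the behavior the naming convention is designed to make easy.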

Simple Steps to Manage Access to Ephemeral Servers

Voilà! With a few little scripts shimmed into our instance boot and shutdown lifecycle hooks, we can rely on our dynamic infrastructure to successfully register and deregister itself from our StrongDM inventory. Small steps, like writing a few bash scripts and plugging them into an AMI, can save your operations team valuable time when working with a large-scale deployment and allow your application engineers to SSH into dynamic infrastructure with ease.

You can try StrongDM out for yourself with a free, 14-day trial.

To learn more about how StrongDM helps companies with managing permissions, make sure to check out our Managing Permissions Use Case.
