Add an Elastic Kubernetes Service Instance Profile Cluster

Last modified on April 7, 2025

This guide describes how to manage access to an Amazon Elastic Kubernetes Service (EKS) Instance Profile cluster via the StrongDM Admin UI. This cluster type supports AWS IAM role authentication for EKS resources and gateways running in EC2. EKS clusters are added and managed in both the Admin UI and the AWS Management Console.

Prerequisites

Before you begin, ensure that the EKS endpoint you are connecting is accessible from one of your StrongDM gateways or relays. See our Nodes guide for more information.

If you are using kubectl 1.30 or higher, it will default to using websockets, which the StrongDM client did not support prior to version 45.35.0. This can be remedied by taking one of the following actions:

- Update your client to version 45.35.0 or greater.

- Set the environment variable KUBECTL_REMOTE_COMMAND_WEBSOCKETS=false to restore the previous behavior in your kubectl.
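The rule above can be sketched as a small check. This is only an illustration: the function names are hypothetical, and it assumes plain numeric version strings such as 1.30.2 or 45.35.0. The version thresholds and the environment variable name come from the text above.

```python
import os

MIN_CLIENT = (45, 35, 0)  # first StrongDM client version with websocket support (per the text)
MIN_KUBECTL = (1, 30)     # kubectl version that defaults to websockets (per the text)


def parse_version(text):
    """Turn '45.35.0' or 'v1.30.2' into a tuple of ints (numeric versions only)."""
    return tuple(int(part) for part in text.lstrip("v").split("."))


def needs_websocket_workaround(kubectl_version, client_version):
    """True when kubectl defaults to websockets but the client predates support."""
    kubectl_uses_websockets = parse_version(kubectl_version)[:2] >= MIN_KUBECTL
    client_too_old = parse_version(client_version) < MIN_CLIENT
    return kubectl_uses_websockets and client_too_old


def kubectl_env(base_env=None):
    """Return a copy of the environment with websockets disabled for kubectl."""
    env = dict(os.environ if base_env is None else base_env)
    env["KUBECTL_REMOTE_COMMAND_WEBSOCKETS"] = "false"
    return env
```

The dictionary returned by kubectl_env could be passed as the env argument of subprocess.run when invoking kubectl from a script.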

Credentials-reading order

During authentication with your AWS resource, the system looks for credentials in the following places in this order:

- Environment variables (if the Enable Environment Variables box is checked)

- Shared credentials file

- EC2 role or ECS profile

As soon as the relay or gateway finds credentials, it stops searching and uses them. Due to this behavior, we recommend that all similar AWS resources with these authentication options use the same method when added to StrongDM.

For example, suppose you use environment variables for AWS Management Console and EC2 role authentication for an EKS cluster. When users connect to the EKS cluster through the gateway or relay, the environment variables are found first and used to authenticate with the EKS cluster, and the attempt fails. To avoid this problem at the gateway or relay level, use the same authentication type for all such resources. Alternatively, you can segment your network by creating subnets with their own relays and sets of resources, so that each relay can be configured to work correctly with just those resources.
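The first-match behavior described above can be modeled with a short sketch. It is not StrongDM's implementation: the function and source names are hypothetical, and it assumes the environment-variable source means the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY variables.

```python
def resolve_credentials(env_enabled, env, shared_file_exists, instance_role_available):
    """Return the name of the first credential source found, or None.

    Mirrors the documented order: environment variables (only when enabled),
    then the shared credentials file, then the EC2 role or ECS profile.
    """
    # Assumption: both standard AWS key variables must be present.
    has_env_keys = "AWS_ACCESS_KEY_ID" in env and "AWS_SECRET_ACCESS_KEY" in env
    sources = [
        ("environment", env_enabled and has_env_keys),
        ("shared_credentials_file", shared_file_exists),
        ("instance_role", instance_role_available),
    ]
    for name, available in sources:
        if available:
            return name  # the search stops at the first hit
    return None
```

With environment variables enabled and set, the instance role is never consulted, which is the failure mode in the example above: the EKS resource expects the role but receives the key pair from the environment.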

Cluster Setup

Manage the IAM Role

- In the AWS Management Console, go to Identity and Access Management (IAM) > Roles.

- Create a role to be used for accessing the cluster, or select an existing role to be used.

- Attach or set the role to what you are using to run your relay (for example, an EC2 instance, ECS task, EKS pod, and so forth). See AWS documentation for information on how to attach roles to EC2 instances, set the role of an ECS task, and set the role of a pod in EKS.

- Copy the Role ARN (the Amazon Resource Name specifying the role).
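Before pasting the copied Role ARN elsewhere, it can help to confirm that it really is a role ARN and not, for example, a user ARN. The following is a minimal sketch with a hypothetical helper name. It assumes the usual IAM role ARN layout (arn:<partition>:iam::<12-digit account>:role/<optional path/><name>) and only checks the shape of the string, not whether the role exists.

```python
import re

# Assumed layout of an IAM role ARN; the role part may include a path prefix.
ROLE_ARN = re.compile(r"^arn:(aws|aws-cn|aws-us-gov):iam::(\d{12}):role/(.+)$")


def parse_role_arn(arn):
    """Split an IAM role ARN into partition, account ID, and role name."""
    match = ROLE_ARN.match(arn.strip())
    if match is None:
        raise ValueError(f"not an IAM role ARN: {arn!r}")
    partition, account_id, role_path = match.groups()
    return {
        "partition": partition,
        "account_id": account_id,
        # Keep only the final segment when the role has a path.
        "role_name": role_path.rsplit("/", 1)[-1],
    }
```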

Grant the Role the Ability to Interact With the Cluster

- While authenticated to the cluster using your existing connection method, run the following command to edit the aws-auth ConfigMap (YAML file) within Kubernetes:

kubectl edit -n kube-system configmap/aws-auth

- Copy the following snippet and paste it into the file under the data: heading, as shown:

    data:
      mapRoles: |
        - rolearn: <ARN_OF_INSTANCE_ROLE>
          username: <USERNAME>
          groups:
            - <GROUP>

In that snippet, do the following:

- Replace <ARN_OF_INSTANCE_ROLE> with the ARN of the instance role.

- Under groups:, replace <GROUP> with the appropriate group for the permissions level you want this StrongDM connection to have (see Kubernetes Roles for more details).

Example:

    data:
      mapRoles: |
        - rolearn: arn:aws:iam::123456789012:role/Example
          username: system:node:{{EC2PrivateDNSNameExample}}
          groups:
            - system:masters

The name under groups: in the mapRoles block must match the subject name in the desired ClusterRoleBinding, not the name of the ClusterRoleBinding itself. For example, if a default EKS cluster has a ClusterRoleBinding called cluster-admin, with a group named system:masters, then the name system:masters must be input in the mapRoles block under groups:.

In the following example of the default ClusterRoleBinding for cluster-admin on an unconfigured EKS cluster, you can see that the group name under Subjects is system:masters.

    Name:         cluster-admin
    Labels:       kubernetes.io/bootstrapping=rbac-defaults
    Annotations:  rbac.authorization.kubernetes.io/autoupdate: true
    Role:
      Kind:  ClusterRole
      Name:  cluster-admin
    Subjects:
      Kind   Name            Namespace
      ----   ----            ---------
      Group  system:masters

Also note that indentation in the YAML file is critically important. If the indentation is wrong, the kubectl edit command does not show an error message, but the change silently fails. The mapRoles key should be at the same indent level as mapUsers in that file.

- Save the file and exit your text editor.
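Because a wrong indent fails silently, one option is to generate the mapRoles snippet instead of typing it by hand. The sketch below uses a hypothetical helper name and simply reproduces the two-space nesting of the usual aws-auth layout. It renders text to paste and does not talk to the cluster.

```python
def render_map_roles(role_arn, username, groups):
    """Render the data/mapRoles block with consistent two-space nesting."""
    lines = [
        "data:",
        "  mapRoles: |",
        f"    - rolearn: {role_arn}",
        f"      username: {username}",
        "      groups:",
    ]
    # One list entry per Kubernetes group, nested under groups:
    lines += [f"        - {group}" for group in groups]
    return "\n".join(lines)


print(render_map_roles(
    "arn:aws:iam::123456789012:role/Example",
    "system:node:{{EC2PrivateDNSNameExample}}",
    ["system:masters"],
))
```

The values passed in the usage lines are the ones from the example in this section.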

Add Your EKS Instance Profile Cluster in the StrongDM Admin UI

Log in to the Admin UI and go to Infrastructure > Clusters.

Click the Add cluster button.

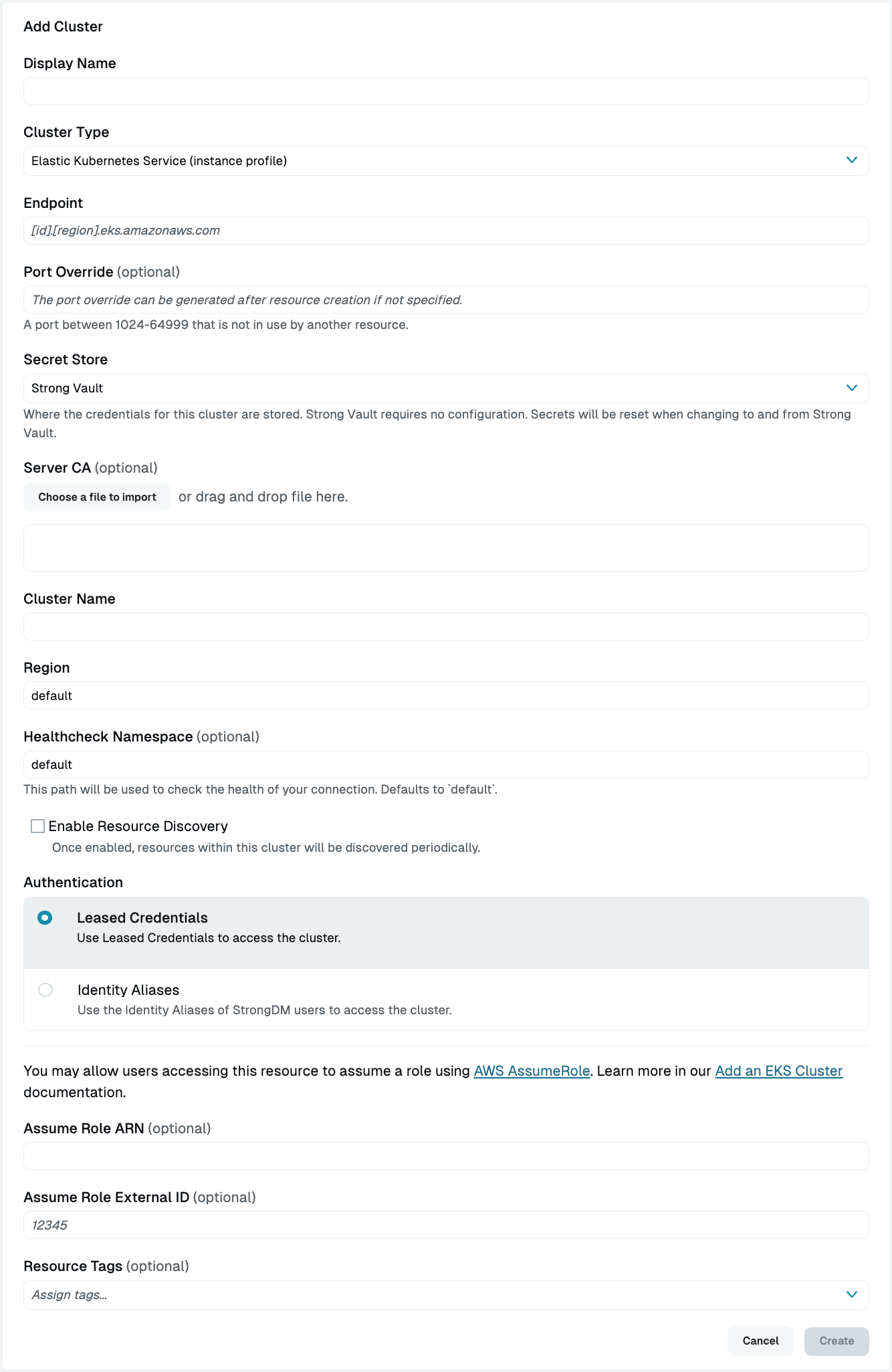

Select Elastic Kubernetes Service (instance profile) as the Cluster Type and set other resource properties to configure how the StrongDM relay connects.

Elastic Kubernetes Service Instance Profile Cluster Setup in Admin UI

Click Create to save the resource.

The Admin UI updates and shows your new cluster in a green or yellow state. Green indicates a successful connection. If it is yellow, click the pencil icon to the right of the server to reopen the Connection Details screen. Then click Diagnostics to determine where the connection is failing.

Resource Properties

Configuration properties are visible when you add a Cluster Type or when you click to view the cluster’s settings. The following table describes the settings available for your EKS Instance Profile cluster.

| Property | Requirement | Description |
|---|---|---|
| Display Name | Required | Meaningful name to display the resource throughout StrongDM; exclude special characters like quotes (") or angle brackets (< or >) |
| Cluster Type | Required | Select Elastic Kubernetes Service (instance profile) |
| Proxy Cluster | Required | Defaults to “None (use gateways)”; if using proxy clusters, select the appropriate cluster to proxy traffic to this resource |
| Endpoint | Required | API server endpoint of the EKS cluster in the format <ID>.<REGION>.eks.amazonaws.com, such as A95FBC180B680B58A6468EF360D16E96.yl4.us-west-2.eks.amazonaws.com; the relay server must be able to connect to your EKS endpoint |
| IP Address | Optional | Shows up when a loopback range is configured for the organization; local IP address used to connect to this resource using the local loopback adapter in the user’s operating system; defaults to 127.0.0.1 |
| Port Override | Optional | Automatically generated with a value between 1024 and 59999 as long as that port is not used by another resource; preferred port can be modified later under Settings > Port Overrides; after specifying the port override number, you must also update the kubectl configuration, which you can learn more about in the Port Overrides section |
| Secret Store | Optional | Credential store location; defaults to Strong Vault; to learn more, see Secret Store options |
| Server CA | Required | Pasted server certificate (plaintext or Base64-encoded), or imported PEM file; you can either generate the server certificate on the API server or get it in Base64 format from your existing Kubernetes configuration (kubeconfig) file |
| Cluster Name | Required | Name of the EKS cluster |
| Region | Required | Region of the EKS cluster, such as us-west-1 |
| Healthcheck Namespace | Optional | If enabled for your organization, the namespace used for the resource healthcheck; defaults to default if empty; supplied credentials must have the rights to perform one of the following kubectl commands in the specified namespace: get pods, get deployments, or describe namespace |
| Enable Resource Discovery | Optional | Enables automatic discovery within this cluster |
| Authentication | Required | Authentication method to access the cluster; select either Leased Credential (default) or Identity Aliases (to use the Identity Aliases of StrongDM users to access the cluster) |
| Identity Set | Required | Displays if Authentication is set to Identity Aliases; select an Identity Set name from the list |
| Healthcheck Username | Required | If Authentication is set to Identity Aliases, the username that should be used to verify StrongDM’s connection to the cluster; username must already exist on the target cluster |
| Assume Role ARN | Optional | Role ARN, such as arn:aws:iam::000000000000:role/RoleName, that allows users accessing this resource to assume a role using AWS AssumeRole functionality |
| Assume Role External ID | Optional | External ID to use when users assume a role in another account; if used, it must be used in conjunction with Assume Role ARN; see the AWS documentation on using external IDs for more information |
| Resource Tags | Optional | Resource tags consisting of key-value pairs <KEY>=<VALUE> (for example, env=dev) |
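A few of the constraints in the table can be checked before you submit the form. The sketch below is illustrative only. The function names are hypothetical, and it makes three assumptions: the forbidden characters are limited to the three the table names, the port range is inclusive, and multiple tags are comma-separated. The Admin UI may enforce more than this.

```python
FORBIDDEN_NAME_CHARS = set('"<>')  # only the characters named in the table


def check_display_name(name):
    """True when the name is non-empty and has no quotes or angle brackets."""
    return bool(name) and not (FORBIDDEN_NAME_CHARS & set(name))


def check_port_override(port):
    """True when the port lies in the documented 1024 to 59999 range."""
    return 1024 <= port <= 59999


def parse_tags(text):
    """Parse 'env=dev, team=platform' into a dict of KEY=VALUE pairs."""
    tags = {}
    for pair in text.split(","):
        key, sep, value = pair.strip().partition("=")
        if not key or not sep:
            raise ValueError(f"expected KEY=VALUE, got {pair!r}")
        tags[key] = value
    return tags
```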

Display name

Some Kubernetes management interfaces, such as Visual Studio Code, do not function properly with cluster names containing spaces. If you run into problems, please choose a Display Name without spaces.

Client credentials

When your users connect to this cluster, they have exactly the rights granted to the IAM role that the gateway or relay uses to authenticate. See AWS documentation for more information.

Server CA

How to get the Server CA from your kubeconfig file:

- Open the CLI and type cat ~/.kube/config to view the contents of the file.

- In the file, under - cluster, copy the certificate-authority-data value. That is the server certificate in Base64 encoding.

    - cluster:
        certificate-authority-data: ... SERVER CERT BASE64 ...
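To confirm that the copied value is a certificate, you can decode it locally. The following is a minimal sketch with a hypothetical function name. It uses a regular expression instead of a YAML parser to stay within the standard library, so it reads only the first certificate-authority-data entry in the file and assumes the value sits on a single line.

```python
import base64
import re


def extract_ca_pem(kubeconfig_text):
    """Return the first certificate-authority-data value decoded to PEM text."""
    match = re.search(r"certificate-authority-data:\s*(\S+)", kubeconfig_text)
    if match is None:
        raise ValueError("no certificate-authority-data entry found")
    # validate=True rejects characters outside the Base64 alphabet.
    pem = base64.b64decode(match.group(1), validate=True).decode("ascii")
    if not pem.startswith("-----BEGIN CERTIFICATE-----"):
        raise ValueError("decoded value is not a PEM certificate")
    return pem
```

Per the Server CA row in the table, either the Base64 value or the decoded plaintext can be pasted into the field.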

Secret Store options

By default, server credentials are stored in StrongDM. However, these credentials can also be saved in a secrets management tool.

Non-StrongDM options appear in the Secret Store dropdown menu if they are created under Settings > Secrets Management. When you select another Secret Store type, its unique properties display. For more details, see Configure Secret Store Integrations.