Written by Maile McCarthy

Last updated on May 11, 2022


Cloud environments present new challenges for data protection as technological innovation, flexibility, and abstraction change the way we copy, store, and handle data. That’s why Justin McCarthy, CTO and co-founder of StrongDM, recently sat down with Mike Vizard and a panel of technology experts. They discussed the unique difficulties modern computing has introduced, the virtues and limits of automation for data protection, and the need for standardization as a way to bring security into development workflows.

Alongside Justin, the panel included:

- Bill Reese — Product Marketing Director at Everbridge

- Christopher Rogers — Technology Evangelist at Zerto

So, how can you protect your data in cloud-native computing environments? Here’s the recap:

What Are the Challenges of Cloud-native Data Protection?

The growing adoption of microservices, serverless computing frameworks, containers, and the other systems that make up cloud-native architectures has changed how DevOps teams think about data protection. The scenarios are different. The people are different. And how we think about data protection should be different as well.

Ransomware attacks have changed how companies approach data protection, which has become a crucial part of any cybersecurity strategy. Developers, security, and ops teams all share a common goal: keeping whatever application is running safe and secure. In cloud-native environments, data protection is no longer an afterthought.

Cloud-native data protection must consider:

- Stateful vs. stateless applications.

- Intellectual property online.

- Storing data on multiple platforms.

- Disaster recovery and backup.

- Balancing compliance constraints (like a consumer’s right to be forgotten).

Even getting these basics right takes a lot of work.

As a general rule, having multiple copies of your data (as long as they’re properly secured) seems like a good thing. But the flexibility of cloud-native architecture leads to situations where it’s very easy to have more copies of your data than you expect.

Consider customer data. If it’s stored in two different locations and the customer asks you to purge it, you only need to purge it in those two places. But across an entire application or microservices architecture, you must first determine exactly what needs to be saved, protected, copied, and moved. How would you answer that purge request in the presence of all of these copies?
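One way to make that purge question answerable is to keep an inventory of every place a customer’s data is copied. The sketch below is a minimal illustration under stated assumptions: `DataCopy`, `DataInventory`, and the example locations are hypothetical names, and it assumes every service reliably registers each copy it creates. It only computes which locations a purge must reach; the actual deletions would be system-specific.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataCopy:
    """One place where a copy of a customer's data lives (hypothetical model)."""

    location: str  # e.g. "orders-db" or "nightly-backup"
    kind: str      # e.g. "primary", "replica", "backup", "cache"


@dataclass
class DataInventory:
    """Hypothetical registry of every known copy of each customer's data."""

    copies: dict[str, list[DataCopy]] = field(default_factory=dict)

    def register(self, customer_id: str, copy: DataCopy) -> None:
        # Assumes every service that writes customer data reports the copy here.
        self.copies.setdefault(customer_id, []).append(copy)

    def purge(self, customer_id: str) -> list[str]:
        """Return every location a purge request must reach, then forget them.

        This only computes the fan-out list; deleting the data at each
        location would need a separate, system-specific call.
        """
        return [c.location for c in self.copies.pop(customer_id, [])]


if __name__ == "__main__":
    inventory = DataInventory()
    inventory.register("cust-42", DataCopy("orders-db", "primary"))
    inventory.register("cust-42", DataCopy("orders-db-replica", "replica"))
    inventory.register("cust-42", DataCopy("nightly-backup", "backup"))
    print(inventory.purge("cust-42"))
    # ['orders-db', 'orders-db-replica', 'nightly-backup']
```

The inventory is only as good as its registrations: a copy that no service reports is exactly the copy a purge request will miss.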

Is Automation the Answer?

When setting up a DevOps workflow to include data protection, automation clearly plays a role. What does that look like?

According to Bill Reese, “It comes down to — what is the ultimate goal? What are we trying to do with [our information]? And then how are we going to go in and protect it at the end of the day?” Whether and how to use automation depends on the type of data and the type of service. Chris Rogers advocates implementing data protection as code. This kind of automation puts data protection in the hands of developers (who understand Kubernetes, cloud native, etc.) rather than relying on a separate ops team to handle it separately.

But ultimately, a human operator does need to be involved in debugging, diagnosing, and interacting with the cluster, server, or database — even the database running inside of Kubernetes. Automation is an essential tool for cloud-native data protection, but you’ve got to be explicit in specifying what humans do with that protected data.
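To make the “data protection as code” idea Chris Rogers describes more concrete, here is a minimal sketch of a protection policy that lives in a service’s repository and is checked automatically, for example in CI. Everything here is an assumption for illustration: `ProtectionPolicy`, `validate`, the field names, and the thresholds (daily backups for stateful services, encryption at rest) are hypothetical and are not a specific vendor’s format or a recommendation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionPolicy:
    """Hypothetical data protection policy checked into a service's repo."""

    service: str
    stateful: bool
    backup_interval_hours: int
    retention_days: int
    encrypted_at_rest: bool


def validate(policy: ProtectionPolicy) -> list[str]:
    """Return policy violations; an empty list means the policy passes.

    The thresholds below are illustrative assumptions, not recommendations.
    """
    problems = []
    if policy.stateful and policy.backup_interval_hours > 24:
        problems.append(f"{policy.service}: stateful service must back up at least daily")
    if policy.retention_days <= 0:
        problems.append(f"{policy.service}: retention must be positive")
    if not policy.encrypted_at_rest:
        problems.append(f"{policy.service}: data must be encrypted at rest")
    return problems


if __name__ == "__main__":
    # In a CI pipeline, a non-empty result would fail the build.
    orders = ProtectionPolicy(
        service="orders",
        stateful=True,
        backup_interval_hours=48,
        retention_days=30,
        encrypted_at_rest=True,
    )
    print(validate(orders))
    # ['orders: stateful service must back up at least daily']
```

Because the policy sits next to the application code, the developers who change the service also review its protection settings, while the rules a human operator must follow stay explicit rather than implied.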

Is Standardization in Our Future?

In many organizations, you’ll see four or five different data protection solutions from different vendors, acquired at different times by different teams for different reasons. Will we ever achieve the idea of one platform and one console to manage all these disparate use cases? How will this impact speed and automation?

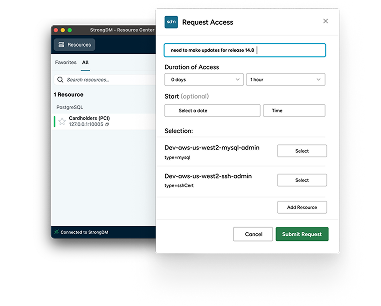

At StrongDM, says Justin McCarthy, “we think about standardizing audited access to systems, and that’s what we do all day long. So all the access is audited in a very consistent way. That’s the human access side of it. Hugely important piece.” But he hopes the future will bring more conventions and standardization in how keys are handled, rather than keys being handled one way in S3, another in Azure, and yet another in some vanilla k8s cluster.

Bill adds, “I think overall, we will get to that single pane of glass, so to speak … I think it’s going to take a little bit of best-in-breed to get there though.”

Until then, the best approach is practice: run drills, and test, test, test.
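Justin’s point about auditing all human access “in a very consistent way” can be illustrated with a single record shape shared by every system type. This is a hypothetical sketch, not StrongDM’s implementation or log format: `AccessEvent`, `audit`, and the field names are assumptions chosen only to show that a database query and a shell command can produce records with identical keys.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessEvent:
    """Hypothetical audit record with the same fields for every system type."""

    user: str
    system_type: str  # e.g. "postgres", "ssh", "kubernetes"
    target: str       # e.g. a database host or cluster name
    action: str       # e.g. the query or command that was run
    timestamp: str    # ISO 8601, UTC


def audit(user: str, system_type: str, target: str, action: str) -> str:
    """Serialize one access event as a single JSON line."""
    event = AccessEvent(
        user=user,
        system_type=system_type,
        target=target,
        action=action,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(event), sort_keys=True)


if __name__ == "__main__":
    # A database query and a shell command produce records with identical keys.
    print(audit("alice", "postgres", "orders-db", "SELECT count(*) FROM orders"))
    print(audit("bob", "ssh", "web-01", "systemctl restart nginx"))
```

A uniform shape like this means one query or alert rule can cover every system, instead of one per vendor’s log format.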

Did you miss the panel? No problem. You can check out the replay. And if you need help building data protection into your DevOps workflows, come on over to StrongDM for a free demo.


About the Author

Maile McCarthy, Contributing Writer and Illustrator, has a passion for helping people bring their ideas to life through web and book illustration, writing, and animation. In recent years, her work has focused on researching the context and differentiation of technical products and relaying that understanding through appealing and vibrant language and images. She holds a B.A. in Philosophy from the University of California, Berkeley. To contact Maile, visit her on LinkedIn.
