What Are Microservices in Kubernetes? Architecture, Example & More

Written by StrongDM Team

Last updated on: March 25, 2025


Microservices make applications more scalable and resilient, and Kubernetes is the backbone that keeps them running smoothly. By orchestrating containers, handling service discovery, and automating scaling, Kubernetes simplifies microservices management—but it also introduces complexity. This guide covers key principles, deployment strategies, and security best practices to help you navigate microservices in Kubernetes. Plus, see a modern way of simplifying access and security, so your teams can build faster—without compromising control. Let’s dive in.

What Are Microservices in Kubernetes?

Microservices in Kubernetes are a cloud-native approach to building applications where services are broken down into small, independent, and loosely coupled components. Each microservice runs in its own container, typically owns its own data store, and communicates with other services through APIs.

Understanding Microservices Architecture

From Monolithic to Microservices

Moving from a monolithic application to microservices requires careful planning and systematic decomposition of your codebase. Rather than a complete overhaul, successful transitions often begin by identifying loosely coupled components within the monolith that can become independent services. This approach allows teams to maintain system stability while gradually breaking down complex solutions into manageable parts.

Development teams can implement the strangler pattern, where newer versions of functionality run alongside the original monolith. By routing specific requests to these microservices while maintaining the underlying infrastructure, organizations can validate their approach before expanding the transformation. This method reduces risk and provides opportunities to refine the deployment process through continuous integration.
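The routing step of the strangler pattern can be sketched in a few lines. This is a minimal illustration, not a production proxy: the path prefixes, the service URLs, and the `resolve_backend` helper are all hypothetical names chosen for the example.

```python
# Hypothetical route table: path prefixes that have already been migrated
# out of the monolith, mapped to the (assumed) microservice base URLs.
MIGRATED_PREFIXES = {
    "/orders": "http://order-service",
    "/notifications": "http://notification-service",
}

# Assumed address of the original monolith, used as the fallback target.
MONOLITH_URL = "http://legacy-monolith"


def resolve_backend(path: str) -> str:
    """Return the backend base URL that should serve the given request path."""
    for prefix, backend in MIGRATED_PREFIXES.items():
        # Match the prefix itself or any sub-path beneath it.
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    # Everything not yet migrated still goes to the monolith.
    return MONOLITH_URL
```

Migrating another capability then means adding one entry to the route table, and rolling back means removing it, which is what keeps the risk of each step small.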

The trade-offs between monolithic and microservices architectures demand thoughtful consideration of your specific needs. Multiple microservices offer greater flexibility and scalability but introduce new challenges in service coordination and deployment complexity.

Core Principles of Microservices Design

Successful microservices architecture depends on fundamental design principles that shape how services interact and operate. Each microservice must maintain a single responsibility, focusing on specific business capabilities rather than trying to handle multiple functions. This approach allows teams to develop, deploy, and scale services independently.

Key principles for effective microservice design include:

- Service Independence: Design services to operate autonomously, with their own data stores and processing capabilities. This prevents cascade failures and enables individual scaling.

- API-First Development: Define clear service interfaces before implementation, ensuring consistent communication patterns across services, whether they run in containers or on virtual machines.

- Data Sovereignty: Each service manages its own data, preventing direct database access from other services and maintaining clear boundaries.

- Resilient Communication: Build services that handle failures gracefully through circuit breakers and fallback mechanisms, so that one failing dependency does not take down its callers.
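To make the resilient communication principle concrete, here is a minimal circuit breaker sketch. The class name, the thresholds, and the injectable `clock` parameter are assumptions for illustration; real deployments often get this behavior from a library or a service mesh rather than hand-written code.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: opens after repeated failures, retries after a cooldown."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # cooldown in seconds while open
        self.clock = clock                # injectable for testing
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, fallback):
        # While open and still cooling down, skip the remote call entirely.
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()
            self.opened_at = None  # cooldown elapsed: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0  # a success closes the circuit again
        return result
```

A caller wraps each remote request, for example `breaker.call(fetch_inventory, lambda: cached_inventory)`, where both function names are placeholders for whatever the service actually does.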

Modern microservices require careful consideration of their network boundaries and IP address management to maintain security and performance. Teams should implement versioning strategies that allow services to evolve while maintaining compatibility with existing consumers.

The Role of Containers in Microservices

Containers serve as the perfect deployment vehicle for microservices, providing standardized environments that ensure consistent operation across development and production. Their lightweight nature allows each microservice to run independently with its own runtime environment and dependencies, eliminating conflicts between services.

Modern container platforms enable rapid scaling and efficient resource utilization, making them ideal for microservices architectures. When a microservice needs more resources or requires an update, containers can be quickly spun up or replaced without affecting other services, maintaining system stability.

The synergy between containers and microservices extends beyond deployment. Containers create clear boundaries between services, enforcing the principle of service independence, while versioned image tags make it straightforward to roll a service forward or back to a known build.

Kubernetes Microservices Example

To better understand how microservices operate in Kubernetes, consider a real-world example of an e-commerce platform. This platform consists of multiple independent services, each responsible for a specific function:

- User Service – Manages user authentication and profiles.

- Product Service – Handles product listings and inventory.

- Order Service – Processes customer orders and payments.

- Notification Service – Sends email and SMS notifications.

Each of these services runs in its own container and communicates through well-defined APIs. Kubernetes orchestrates these microservices by:

- Service Discovery & Load Balancing – When a user places an order, Kubernetes automatically routes requests between the Order Service and Product Service, ensuring high availability and optimal performance.

- Auto-scaling – During peak shopping periods, Kubernetes automatically scales the Order Service and Notification Service to handle increased traffic.

- Rolling Updates – The development team deploys updates to the Product Service using a rolling update strategy, which replaces pods gradually to avoid downtime.

- Security & Access Control – Role-Based Access Control (RBAC) governs who can manage each service's Kubernetes resources, while network policies ensure only authorized services can reach the User Service, preventing unauthorized access.

This example highlights the power of Kubernetes in managing microservices at scale, providing automated deployment, self-healing, and robust networking to build resilient applications.
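As a rough illustration of the Order Service in this example, the sketch below builds a Deployment and a Service manifest as plain Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The name `order-service`, the image reference, the port numbers, and the `/healthz` probe path are assumptions made for this example, not details of a real system.

```python
import json


def deployment(name, image, replicas=3, port=8080):
    """Build an apps/v1 Deployment manifest with a rolling update strategy."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Add one new pod at a time and never drop below the desired count.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Assumed health endpoint; traffic is sent only to ready pods.
                        "readinessProbe": {"httpGet": {"path": "/healthz", "port": port}},
                    }]
                },
            },
        },
    }


def service(name, port=80, target_port=8080):
    """Build a v1 Service that load-balances across pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference, pinned to a version tag rather than "latest".
    print(json.dumps(deployment("order-service", "registry.example.com/order-service:1.4.2"), indent=2))
    print(json.dumps(service("order-service"), indent=2))
```

The Service selector and the pod template labels must match, which is why both are derived from the same name here.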

Why Use Kubernetes for Microservices?

Benefits of Container Orchestration

Modern development teams face growing complexity in managing containerized applications at scale. Container orchestration transforms this challenge by providing automated control over deployment, scaling, and operations across your entire infrastructure.

The power of orchestration lies in its ability to handle complex operational tasks automatically. When your application needs more resources, orchestration platforms dynamically adjust container placement and resource allocation. This automation extends to health monitoring, where the system detects and replaces failed containers without human intervention.

Development teams gain robust scheduling capabilities that optimize resource usage across your infrastructure. By defining deployment rules and constraints, you ensure containers run on the most appropriate nodes while maintaining consistent performance. The orchestration layer also manages networking between containers, simplifying service-to-service communication in your microservices architecture.

💡Make it easy: Over-privileged admin accounts are a security risk. StrongDM’s Just-In-Time (JIT) Access ensures engineers get the exact privileges they need—nothing more, nothing less—eliminating standing credentials.

Service Discovery and Load Balancing

Dynamic microservices environments demand robust mechanisms for locating and connecting services. When pods spin up or down, your network needs to maintain seamless communication without manual intervention. Kubernetes handles this through built-in DNS-based service discovery, ensuring pods can find and communicate with each other using consistent service names rather than brittle IP addresses.
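The consistent service names mentioned above follow a predictable pattern. The helper below is a small sketch that assembles the fully qualified DNS name of a Service; it assumes the common default cluster domain `cluster.local`, which an administrator can change, and the function name is illustrative.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the fully qualified in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, namespace: str = "default", port: int = 80) -> str:
    """Build an HTTP URL that a client pod could use to reach the Service."""
    return f"http://{service_dns_name(service, namespace)}:{port}"
```

Pods in the same namespace can usually use the short service name alone; the longer form matters when calling across namespaces.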

Load balancing works hand-in-hand with service discovery to distribute traffic effectively across available pods. Service objects act as stable endpoints, abstracting away the complexity of pod lifecycle management while providing automatic load distribution. This approach maintains high availability by routing requests only to healthy pods and scaling smoothly as demand changes.

Network policies and service mesh implementations enhance these capabilities further, offering granular control over service-to-service communication patterns and advanced traffic management features.

💡Make it easy: Enforcing consistent security policies across hybrid cloud environments is difficult. StrongDM’s Cedar-based policy enforcement standardizes security controls across AWS, GCP, Azure, and on-prem Kubernetes clusters.

Automated Scaling and Self-healing

Kubernetes maintains application performance through automated scaling and self-healing capabilities. When workload demands increase, the Horizontal Pod Autoscaler adjusts replica counts automatically, while the Vertical Pod Autoscaler, an optional add-on installed separately, adjusts the CPU and memory requests of individual pods. This dynamic scaling keeps resource utilization efficient without manual intervention.
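The core calculation behind the Horizontal Pod Autoscaler is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that formula only; it ignores details such as stabilization windows and not-yet-ready pods, and the parameter names are chosen for this example.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For instance, four replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the sketch asks for six replicas.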

The platform's self-healing mechanisms continuously monitor container health and automatically respond to failures. When a container fails its liveness probe, the kubelet restarts it, and when a pod is lost, its controller (for example, a Deployment's ReplicaSet) creates a replacement. This automated recovery extends beyond individual containers to entire nodes: when a node fails, its workloads are rescheduled onto healthy nodes to maintain service availability.

These automated capabilities transform how teams manage production environments, shifting focus from reactive troubleshooting to proactive application development. With Kubernetes handling routine scaling and recovery tasks, development teams can concentrate on building robust features that drive business value.

Deploying Microservices on Kubernetes

Setting Up Your First Kubernetes Cluster

Getting your first Kubernetes cluster running requires careful planning and the right toolset. Cloud providers like Google Kubernetes Engine offer managed options that handle control plane maintenance, while self-hosted solutions give you complete control over your infrastructure.

For development environments, tools like Minikube or K3s provide lightweight alternatives that run locally. These solutions help you understand cluster components without the complexity of production setups. Your choice depends on factors like team expertise, scalability needs, and resource constraints.

Configure your cluster with proper node pools and networking to support your microservices. Set up role-based access control early to manage permissions effectively, and implement monitoring tools to track cluster health from day one.

Container Image Management Best Practices

Proper container image management forms the foundation of secure and efficient Kubernetes deployments. Base images require careful evaluation for security vulnerabilities and unnecessary components that could expand your attack surface. Users benefit from implementing multi-stage builds to create lightweight images, removing development tools and intermediate artifacts from the final container.

Regular scanning and version control of container images help prevent deployment of compromised or outdated code. Pull images only from trusted registries, and avoid relying on the mutable "latest" tag by pinning images to specific version tags or digests. Implement immutable tags to ensure consistency between development and production environments.

Apply Pod Security Standards through the built-in Pod Security Admission controller to restrict privileges and prevent containers from running as root (the older PodSecurityPolicy API was removed in Kubernetes 1.25). When building custom images, follow the principle of least privilege: include only the components your application needs to run. These practices create a robust foundation for your microservices architecture while maintaining security and performance.

Deployment Strategies and Patterns

Selecting the right deployment strategy shapes how your microservices handle updates and maintain availability. Rolling updates provide a balanced approach by gradually replacing pods with newer versions, minimizing downtime while managing risk. Blue-green deployments offer zero-downtime updates through parallel environments, though they require more resources.

For more complex scenarios, canary deployments enable controlled testing by routing a portion of traffic to new versions. This approach helps validate changes in production while maintaining system stability. Consider these key deployment patterns:

- Progressive delivery: Use feature flags and traffic splitting to control feature rollout

- Shadow deployments: Test new versions with production traffic without affecting users

- Multi-stage rollouts: Combine strategies to match your risk tolerance and business needs

Each pattern brings specific benefits to your microservices architecture, from improved reliability to faster feedback cycles. Match your deployment approach to your application's requirements and team capabilities.
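The traffic-splitting idea behind canary deployments can be illustrated with a small sketch. In a Kubernetes cluster this decision is normally made by an ingress controller or service mesh rather than application code; the function below only shows the principle, and its name and the hashing scheme are assumptions for the example.

```python
import hashlib


def pick_version(user_id: str, canary_percent: int) -> str:
    """Deterministically assign a user to the 'canary' or 'stable' version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the user to a stable bucket in the range 0..99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"
```

Hashing the user ID instead of picking at random keeps each user on the same version across requests, and raising `canary_percent` only moves additional users onto the canary.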

How Do Microservices Communicate in Kubernetes?

Inter-service Communication Patterns

Microservices in Kubernetes rely on two fundamental communication approaches: synchronous and asynchronous patterns. Synchronous communication works through direct service-to-service calls, where one service waits for another's response before proceeding. This pattern suits real-time operations like user authentication or payment processing.

Asynchronous communication enables services to exchange information without waiting for immediate responses. Through message brokers and event streams, services can maintain independence while ensuring reliable data exchange. For example, when processing orders, an order service might emit events that inventory and shipping services consume independently.
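The order example can be sketched with a tiny in-process event bus. This stands in for a real message broker purely for illustration: the `EventBus` class, the `order.created` topic name, and the payload fields are all hypothetical.

```python
from collections import defaultdict, deque


class EventBus:
    """Toy stand-in for a message broker: publishers enqueue, consumers drain."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        # The publisher only enqueues; it never waits for consumers.
        self._queue.append((topic, payload))

    def drain(self):
        # Deliver queued events; a real broker would do this out of process.
        while self._queue:
            topic, payload = self._queue.popleft()
            for handler in self._subscribers[topic]:
                handler(payload)


if __name__ == "__main__":
    bus = EventBus()
    reserved, shipments = [], []
    # Inventory and shipping each react to the same event independently.
    bus.subscribe("order.created", lambda e: reserved.append(e["sku"]))
    bus.subscribe("order.created", lambda e: shipments.append(e["order_id"]))
    bus.publish("order.created", {"order_id": 1, "sku": "A-100"})
    bus.drain()
    print(reserved, shipments)
```

The order service knows nothing about its consumers, so a new subscriber can be added without changing the publisher.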

Both patterns support specific use cases within your architecture. Synchronous calls provide consistency for critical operations, while asynchronous communication helps build resilient, loosely coupled systems that can handle temporary service outages or high-load scenarios.

API Gateway Implementation

Managing microservices traffic requires a robust API gateway to handle external requests effectively. Your gateway serves as the primary entry point, controlling how client applications interact with your services while enforcing security policies and managing traffic flow.

Set up routing rules that map external endpoints to internal microservices, ensuring clean separation between public APIs and backend implementations. Configure authentication at the gateway level to validate requests before they reach your services, reducing the authentication burden on individual microservices.

Modern API gateways support advanced features like request transformation and response caching. Transform incoming requests to match your internal service protocols, and cache frequently accessed responses to reduce latency. When implementing rate limiting, consider both global limits and service-specific thresholds to protect your infrastructure from overload.
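Rate limiting at a gateway is commonly implemented with a token bucket. The sketch below shows one possible version; the class name, parameters, and injectable clock are assumptions for illustration rather than the API of any particular gateway product.

```python
import time


class TokenBucket:
    """Token bucket limiter: refills at `rate` tokens per second up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock              # injectable for testing
        self.tokens = float(capacity)   # start with a full bucket
        self.updated = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, else False."""
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

To combine global and service-specific thresholds, a gateway could keep one shared bucket plus one bucket per backend service and admit a request only when both allow it.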

Service Mesh Architecture

A service mesh fundamentally transforms how microservices interact by creating a dedicated infrastructure layer for service-to-service communication. This architectural pattern separates network logic from application code, allowing developers to focus purely on business functionality while the mesh handles communication complexities.

The mesh deploys lightweight proxies alongside each service, forming a sidecar pattern that intercepts all network traffic. These proxies manage critical functions like service discovery, load balancing, and circuit breaking without requiring changes to the application code. Through centralized policy enforcement, teams can implement consistent security controls and traffic management across their entire microservices ecosystem.

By abstracting network concerns into a unified control plane, service mesh architecture significantly reduces operational complexity while enhancing observability and reliability. This approach proves particularly valuable in dynamic Kubernetes environments where services frequently scale and evolve.

Scaling Kubernetes Microservices

Horizontal vs Vertical Scaling

Understanding when to scale up versus scale out shapes the effectiveness of your microservices deployment strategy. Vertical scaling enhances individual pod resources, allowing services to handle increased workloads by adding CPU power or memory to existing instances. This approach works well for stateful applications that require consistent instance identity.

Horizontal scaling takes a different path by creating additional pod replicas to distribute workload across multiple instances. Each replica maintains the same resource limits while the Horizontal Pod Autoscaler manages the number of instances based on demand. This method particularly benefits stateless microservices that can run in parallel.

Your choice between these scaling approaches depends on your application architecture and resource constraints. While vertical scaling provides quick performance boosts for resource-intensive workloads, horizontal scaling offers better fault tolerance and can handle larger traffic variations through load distribution.

Resource Management and Optimization

Effective resource management in Kubernetes requires balancing performance with cost efficiency. Pod resource requests and limits serve as the foundation for proper allocation, ensuring each microservice receives necessary computing power without overconsuming cluster resources.

Resource quotas at the namespace level help teams maintain control over their microservices footprint. By implementing these quotas, organizations prevent resource contention between different applications and teams sharing the same cluster. Quality of Service (QoS) classes further refine how Kubernetes handles resource allocation, determining which pods maintain priority during resource constraints.
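How requests and limits map to a QoS class can be sketched as follows. This is a simplified model of the documented rules (Guaranteed, Burstable, BestEffort); it assumes resource quantities are compared as literal strings, so it would not treat "500m" and "0.5" as equal the way Kubernetes does, and the input shape is invented for the example.

```python
def qos_class(containers):
    """Classify a pod given a list of container dicts.

    Each dict may carry 'requests' and 'limits' dicts keyed by 'cpu' and 'memory'.
    """
    any_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        if requests or limits:
            any_set = True
        for resource in ("cpu", "memory"):
            limit = limits.get(resource)
            # A missing request defaults to the limit when a limit is set.
            request = requests.get(resource, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"   # first to be evicted under node pressure
    return "Guaranteed" if guaranteed else "Burstable"
```

Under node memory pressure, BestEffort pods are evicted first and Guaranteed pods last, which is why critical services usually set requests equal to limits.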

Configure your workloads with right-sized resource specifications through careful monitoring and adjustment. When pods consistently use less than requested resources, you can adjust their specifications downward. Conversely, pods terminating due to resource constraints signal the need for increased allocations or improved application optimization.

Security Best Practices for Kubernetes Microservices

Access Control and Authentication

Managing access in a microservices environment presents unique security challenges beyond traditional authentication methods. Zero Trust principles require validating every request between services, regardless of its origin within the cluster.

Role-Based Access Control forms the foundation of secure microservices deployment, allowing teams to define granular permissions based on service identities and responsibilities. Implement multi-factor authentication at key access points while using service accounts to manage automated processes and inter-service communication.
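A least-privilege grant for a service account might look like the sketch below, which builds a Role and RoleBinding as Python dictionaries that can be serialized to JSON for kubectl. The role name `pod-reader`, the namespace, and the service account name are placeholders for illustration.

```python
import json


def read_only_pod_role(namespace, name="pod-reader"):
    """Build a Role that allows read-only access to pods in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [{
            "apiGroups": [""],            # "" is the core API group
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_to_service_account(role, service_account):
    """Build a RoleBinding granting the Role to a ServiceAccount in the same namespace."""
    namespace = role["metadata"]["namespace"]
    role_name = role["metadata"]["name"]
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{service_account}"},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role_name},
    }


if __name__ == "__main__":
    role = read_only_pod_role("shop")
    print(json.dumps(role, indent=2))
    print(json.dumps(bind_to_service_account(role, "order-service"), indent=2))
```

Because a Role is namespaced, this grant cannot leak into other namespaces; cluster-wide access would require a ClusterRole instead.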

Modern Kubernetes environments benefit from automated certificate management and rotation, reducing the risk of expired credentials compromising service communication. Build authentication chains that validate both user identities and service accounts, ensuring consistent security across your entire microservices architecture.

💡Make it easy: Kubernetes RBAC is rigid and complex. StrongDM dynamically syncs RBAC policies in real time, preventing misconfigurations and ensuring least-privilege enforcement.

Network Security Policies

Network policies serve as the firewall rules of Kubernetes, defining how pods communicate within and across cluster boundaries. These policies control both ingress and egress traffic through granular rules that specify allowed connections based on pod labels, namespaces, and IP ranges. Modern security demands zero-trust networking where all pod-to-pod communication requires explicit permission.

Well-crafted network policies create isolation zones between workloads, preventing unauthorized lateral movement between microservices. For example, a backend API pod can be configured to accept traffic only from specific frontend services, while blocking all other incoming requests. This granular control extends to external traffic, where policies can restrict outbound connections to approved endpoints.
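The backend example above could be expressed roughly as follows. The sketch builds a NetworkPolicy manifest as a Python dictionary; the policy name, labels, namespace, and port are assumptions for illustration. Note that a NetworkPolicy only takes effect when the cluster's network plugin enforces it.

```python
import json


def allow_ingress_only_from(name, namespace, target_labels, source_labels, port):
    """Build a NetworkPolicy that admits traffic to target pods only from source pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            # Once selected for Ingress, all traffic not listed below is denied.
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_only_from(
        "backend-allow-frontend", "shop",
        target_labels={"app": "backend-api"},
        source_labels={"app": "frontend"},
        port=8080,
    )
    print(json.dumps(policy, indent=2))
```

A common companion is a default-deny policy with an empty pod selector, so that every workload in the namespace starts isolated and each allowed path is added explicitly.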

Proper network segmentation through these policies forms a critical defense layer, enabling teams to maintain compliance requirements while supporting dynamic microservices architectures. When combined with pod security standards and proper namespace isolation, network policies create comprehensive barriers against potential security breaches.

Secrets Management

Proper secrets management requires a comprehensive approach beyond basic password storage. Kubernetes Secrets provide a dedicated object for sensitive data like API keys, certificates, and database credentials, so applications can access necessary authentication details without exposing them in code or configuration files. By default, Secret values are only base64-encoded and stored unencrypted in etcd, so enable encryption at rest and restrict access with RBAC.
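One detail worth keeping in mind is that the `data` field of a Secret manifest holds base64-encoded values, and base64 is an encoding rather than encryption. The short sketch below shows how trivially such values round-trip; the helper names and the sample credential are made up for the example.

```python
import base64


def encode_secret_data(values):
    """Encode plain strings the way a Secret manifest's 'data' field expects."""
    return {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in values.items()}


def decode_secret_data(data):
    """Reverse the encoding; anyone who can read the Secret can do the same."""
    return {k: base64.b64decode(v).decode("utf-8") for k, v in data.items()}


if __name__ == "__main__":
    encoded = encode_secret_data({"username": "admin"})
    print(encoded)
    print(decode_secret_data(encoded))
```

This is why access to Secret objects needs to be restricted and why encryption at rest or an external secrets manager is usually layered on top.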

External secrets managers enhance this capability by providing advanced features like automatic rotation, version control, and audit logging. These tools integrate seamlessly with Kubernetes through custom resource definitions, allowing teams to maintain centralized control while enabling automated secret distribution across multiple clusters.

Role-based access controls combined with encryption at rest protect secrets throughout their lifecycle. Use RBAC and Pod Security Standards to limit which workloads can read or mount secrets, and use dynamic secret injection to ensure applications receive only the credentials they need during runtime.

💡Make it easy: Manual secrets management introduces risks. StrongDM secures Kubernetes secrets while ensuring audit-ready access controls that meet SOC 2, HIPAA, and PCI-DSS compliance requirements.

Debugging and Troubleshooting Microservices

Monitoring and Observability

Debugging microservices demands visibility beyond basic performance metrics. Effective observability combines logs, traces, and metrics to reveal the complex interactions between services, enabling teams to quickly identify and resolve issues before they impact users.

Distributed tracing sheds light on request flows across your microservices landscape, helping pinpoint bottlenecks and latency issues. By correlating trace data with container logs and performance metrics, teams gain deep insights into service behavior and dependencies.

Advanced monitoring tools enhance Kubernetes observability by automatically discovering new services and tracking their health. These platforms help teams understand resource utilization patterns, detect anomalies in service communication, and maintain optimal performance across the entire microservices ecosystem.

Common Issues and Solutions

Microservices running on Kubernetes face several persistent challenges that require systematic resolution approaches. Pod scheduling failures often stem from resource constraints or node availability issues, while network connectivity problems can disrupt service-to-service communication patterns.

Configuration drift between environments leads to unexpected behavior when deploying across development and production clusters. Reduce drift by keeping manifests in version control and applying them declaratively, and implement liveness and readiness probes that accurately reflect service states. For networking challenges, proper service discovery mechanisms and well-defined network policies ensure reliable communication paths.

Resource optimization demands careful monitoring of CPU and memory usage patterns, combined with appropriate quota settings that prevent resource exhaustion while maintaining service availability. When troubleshooting complex issues, examine pod logs and events systematically to identify root causes and implement lasting solutions.

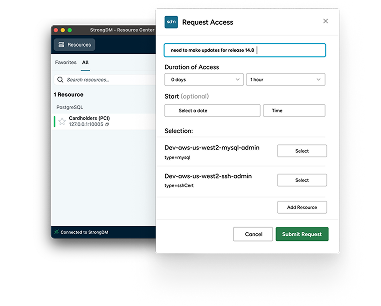

StrongDM’s Approach to Securing Kubernetes Microservices

Kubernetes simplifies deploying and managing microservices, but without strong security and access controls, these distributed systems can become vulnerable to unauthorized access, data leaks, and lateral movement attacks. StrongDM’s Zero Trust Privileged Access Management (PAM) solution provides comprehensive security and access control to safeguard Kubernetes environments:

- Granular Access Management: Control access to Kubernetes clusters, ensuring only authorized users and services interact with sensitive workloads.

- Dynamic Role-Based Access Control (RBAC): Assign and enforce least-privilege access dynamically to reduce security risks.

- Audit Logging & Observability: Maintain full visibility over who is accessing what, when, and why—providing real-time insights into potential threats.

- Secrets Management & Secure Authentication: Prevent plaintext credentials from being exposed in Kubernetes manifests by integrating with vaults for secure key distribution.

- Zero Trust Networking: Enforce strict network policies and just-in-time access to protect microservices from unauthorized lateral movement.

By securing Kubernetes access and enforcing Zero Trust principles, StrongDM helps organizations build scalable, resilient, and secure cloud-native applications. Book a demo today to see how StrongDM can enhance security across your Kubernetes infrastructure.

Frequently Asked Questions

What is Kubernetes and why does it matter?

Kubernetes is an open-source container orchestration platform that automates deployment, scaling, and management of containerized applications. It originated from Google's Borg system and has become the standard for cloud-native development due to its scalability, load balancing, and access control features.

What are the key components of Kubernetes architecture?

Kubernetes follows a master-worker model:

- Control Plane (Master Nodes): Manages the cluster via the API server, etcd (state storage), scheduler, and controller manager.

- Worker Nodes: Run workloads using kubelet (container management), kube-proxy (networking), and a container runtime (e.g., containerd or CRI-O).

- Additional Features: Supports custom resources and operators for extending functionality.

How Kubernetes enables modern software development

Kubernetes simplifies application deployment and scaling by:

- Automating replica scaling with the Horizontal Pod Autoscaler

- Ensuring high availability with liveness and readiness probes

- Managing infrastructure complexities like persistent storage, networking, and security

This enables developers to focus on code while Kubernetes handles orchestration.

Next Steps

StrongDM unifies access management across databases, servers, clusters, and more—for IT, security, and DevOps teams.

- Learn how StrongDM works

- Book a personalized demo

- Start your free StrongDM trial

About the Author

StrongDM Team: The StrongDM team is building and delivering Universal Privileged Access Authorization (UPAA), a Zero Trust Privileged Access Management (PAM) approach that delivers precise, dynamic control over privileged actions for any type of infrastructure. Its frustration-free access stops unsanctioned actions while ensuring continuous compliance.
